"Blackbox" Systems Management

Some abstractions have a rare combination of generality and power, meaning:

- They can be applied to a lot of different situations

- They provide useful insight that allows you diagnose current performance and make changes for the better

One of those abstractions is the "business process blackbox". As far as I know, this was first put forward by Andy Grove in High Output Management (totally worth a read!). However it was just something that had already seeped in to my mind before I read it. It was published in 1983, and by the time I started my MBA in 2002, the concepts were already "out there".

The concept behind a the blackbox model is that the business process can be anything--anything at all--and still be measured and managed according similar performance characteristics. In my own professional career, the areas include:

- Managing software feature development

- Recruiting product managers to write product specifications

- Investing in capacity for a wireless ISP

- Building a team to do user analytics investigations fo r

- Sizing a finance team to conduct month-end and year-end closes

- Projecting B2C customer acquisition

- Database development

- Re-organizing teams that product creative content for marketing campaigns

- Doing some amateur traffic pattern analysis when I'm stuck on the 405

As the list above shows, this abstraction can be applied to just about any activity.

What are the key metrics for the blackbox? There are four:

- Quality

- Bandwidth

- Latency

- Cost

Quality and cost usually require bespoke metrics to capture the underlying process. Bandwidth and latency, though, are nearly universal. I'll dig into those two here.

Bandwidth

Bandwidth

Bandwidth is the total output of a system per unit time. When possible, you want measure the output at the "exit" of the blackbox. If that's not possible, you can measure the bandwidth at any representative point along the way--but if you misjudge how "representative" that point is, you'll lose the ability to predict and control the system.

For example, a garden hose has a higher bandwidth than drinking straw. A fire hose has yet more. The Amazon river has even more. You measure the bandwidth for each of these "water channels" at the place where the water leaves the channel.

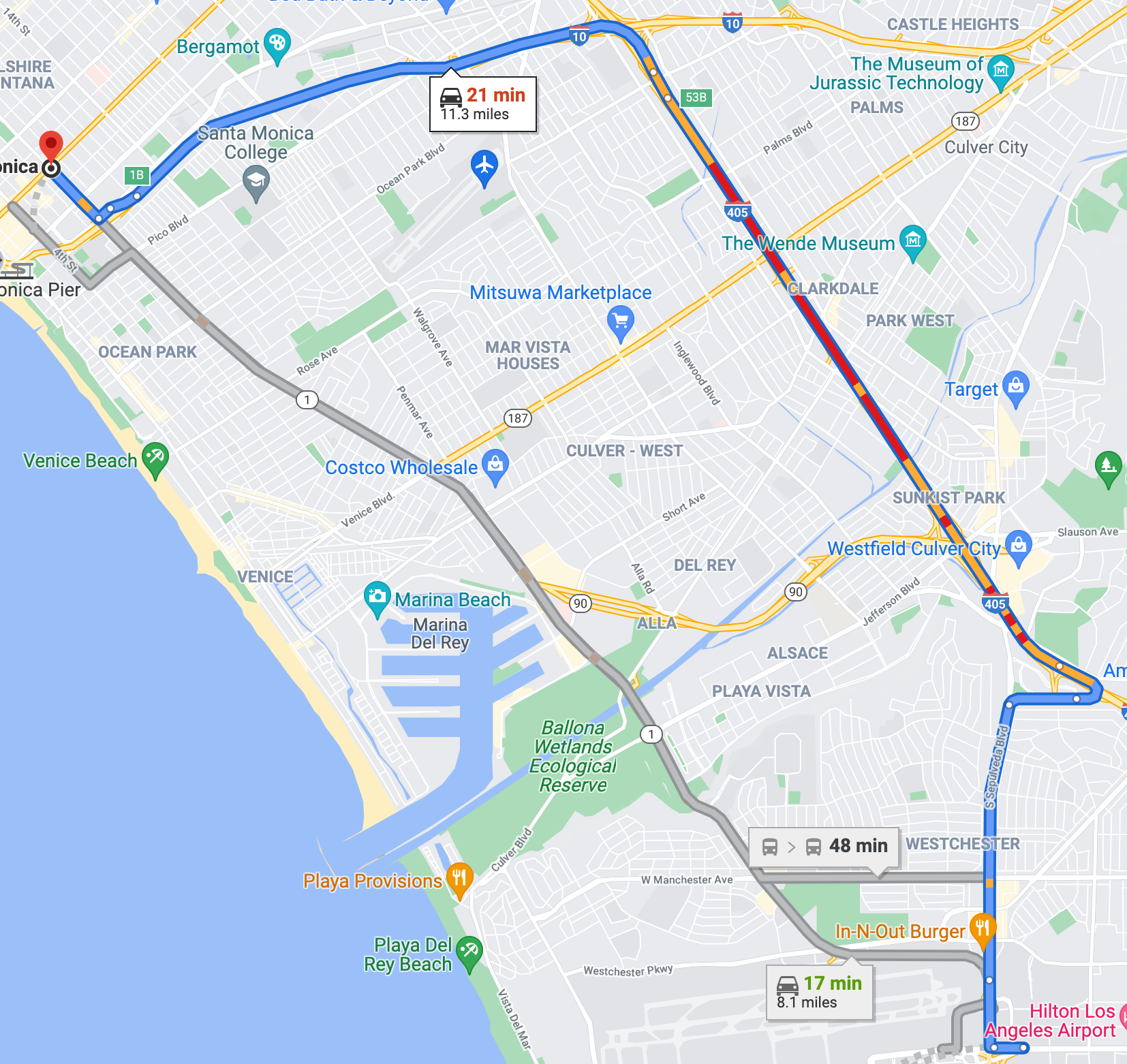

Another example would be a road system. Let's imagine you're trying to get from LAX to Santa Monica. Here are some options:

Notice that there are multiple paths--and because it's a road system, there's no single point of "exit". Some people on the 405 North will be going to Santa Monica right alongside you. Some drive past to points further north. Some will be taking the 405 to get the 10E and head points east. Where do you measure the output?

Ideally, you want to measure the output at every segment of road (however you define segment) and then compare. You'll find bottlenecks that will then determine the output of the entire system--despite that the fact that the system is not a straight line and people are using it in different ways. (Also remember, the measurement is how many cars pass by per hour.)

In practice, measuring bandwidth is a crazy hard problem. However, the concept is simple enough to let you apply it to day to day problems and get surprisingly effective results.

Latency

Latency is the time it takes a single unit of work to be done by the system, start to finish.

Most people only kind of get latency when talking about water, bits, electrons, or whatever. In our water example, it's the time it takes for a single molecule of water to get through the drinking straw, through the fire hose, or from the mountains to the mouth of the Amazon river. We're not water molecules, so the example is hard to relate to.

However, we have lived experience of it every day when it comes to traffic. When it comes to cars on road, I couldn't care less how many cars per hour the route supports. The only thing I care about is: am I going to be late?

The answer to depends on the travel time from the starting point to the destination--which is exactly what latency is.

The Fundamental Problem of Systems Management

When managing the performance, bandwidth and latency are very different.

While you can increase the bandwidth of a system by increasing the speed that the jobs get completed (think having the cars zoom down the 405 at 200 mph), this usually runs into limits quite quickly. Drivers can only handle so much speed. Water faces resistance that slows it down. Even bits are limited by the speed of light (which doesn't sound like it would be much of a limit--but it is).

While making things run faster might double, triple, or even 10x a system's bandwidth, the real gains from parallelization--doing multiple jobs at the same time.

Parallelization is why the bandwidth of the Amazon river carries 552 million garden hoses worth of water. Makes 10x sound like nothing, right? That's the power of parallelization in increasing bandwidth.

However, parallelization comes with costs.

Let's get a bit more concrete. Here are some basic systems and their parallelized counterparts:

- garden hose -> Amazon river

- 120v electrical socket -> high voltage power lines

- transistor -> central processing unit (CPU)

- telephone wire -> fiber optic cable

- person -> corporation

Notice that, except for the Amazon river (which isn't a man-made instance of parallelization), each parallelized counterpart is a lot more complex. Even the power line example involves a lot of specialized equipment to make the parallelization happen (and then undo it).

What the remaining three examples have in common is that they involve some form of information transmission or processing capability. In these types of systems--unlike with water or electrons--it matters which information get transmitted and which calculation gets processed. That requires coordinating the work of the parallel "workers".

Coordination incurs significant overhead. That overhead--generally--results in increased latency. That is a the fundamental problem in systems management, whether we're talking about computers or corporations.

To recap:

- To increase bandwidth, you parallelize

- To parallelize information or processing based systems, you have to coordinate

- Coordination = overhead

- The overhead causes higher latency, which causes other problems

Consequences of Higher Latency

Systems with higher latency require more time to turn new inputs into new output. That makes them less responsive to change--less agile. The wait times can cause high levels of waste and also frustrate people who are waiting on the output of the system.

Imagine Netflix launched its streaming video service, but instead of starting to play new videos within a few seconds, you made your selection and had to wait a whole week for the video to start to play. You'd be sitting there on Saturday, trying to decide what you wanted to watch the following Saturday. Obviously, no one would put up with this--but let's work through the consequences.

- You'd have to make your choice and stick to it because the costs of changing your mind are so high

- If anything changed in your schedule, you'd miss your movie

- If a new release came out mid-week, you'd be unable to do anything about it

All of this would make the act of selecting a movie really stressful and just not fun. At that point, you'd rather just drive down to Blockbuster and spend an hour wandering the aisles to pick out a DVD in person. That's how horrible high latency is. It makes this look like the "good" option:

This sounds ridiculous in this setting--but this is the natural state of affairs for most business processes. Here are the general latencies required for different system outputs:

- Software features: weeks to months

- Consumer goods manufacturing: months

- New airplanes: years

- New power plants: decades

But as the success of Netflix and fast-fashion companies like Zara show--low latency opens up a whole new world of possibilities.

In fact, when there's competition at play, high latency = death. Just rabbits that take 5 minutes to react to the presence of a coyote won't make it too far in life, high latency systems will find themselves hopelessly ineffective in changing environments.

The only times high latency systems survive is when all players in the industry have the same high latency--but it's still really hard to work with them. In some cases, groups might try to mandate interaction with their high latency systems (think government or other legal systems) instead of lowering the latency to acceptable levels. These deserve all the disdain they engender.

Next up, dig into The Drivers of Latency in Business.